| import numpy as np

import matplotlib.pyplot as plt

import torch

x_data = [1.0, 2.0, 3.0]

y_data = [2.0, 4.0, 6.0]

w1 = torch.Tensor([1.0])

w1.requires_grad = True

w2 = torch.Tensor([1.0])

w2.requires_grad = True

b = torch.Tensor([1.0])

b.requires_grad = True

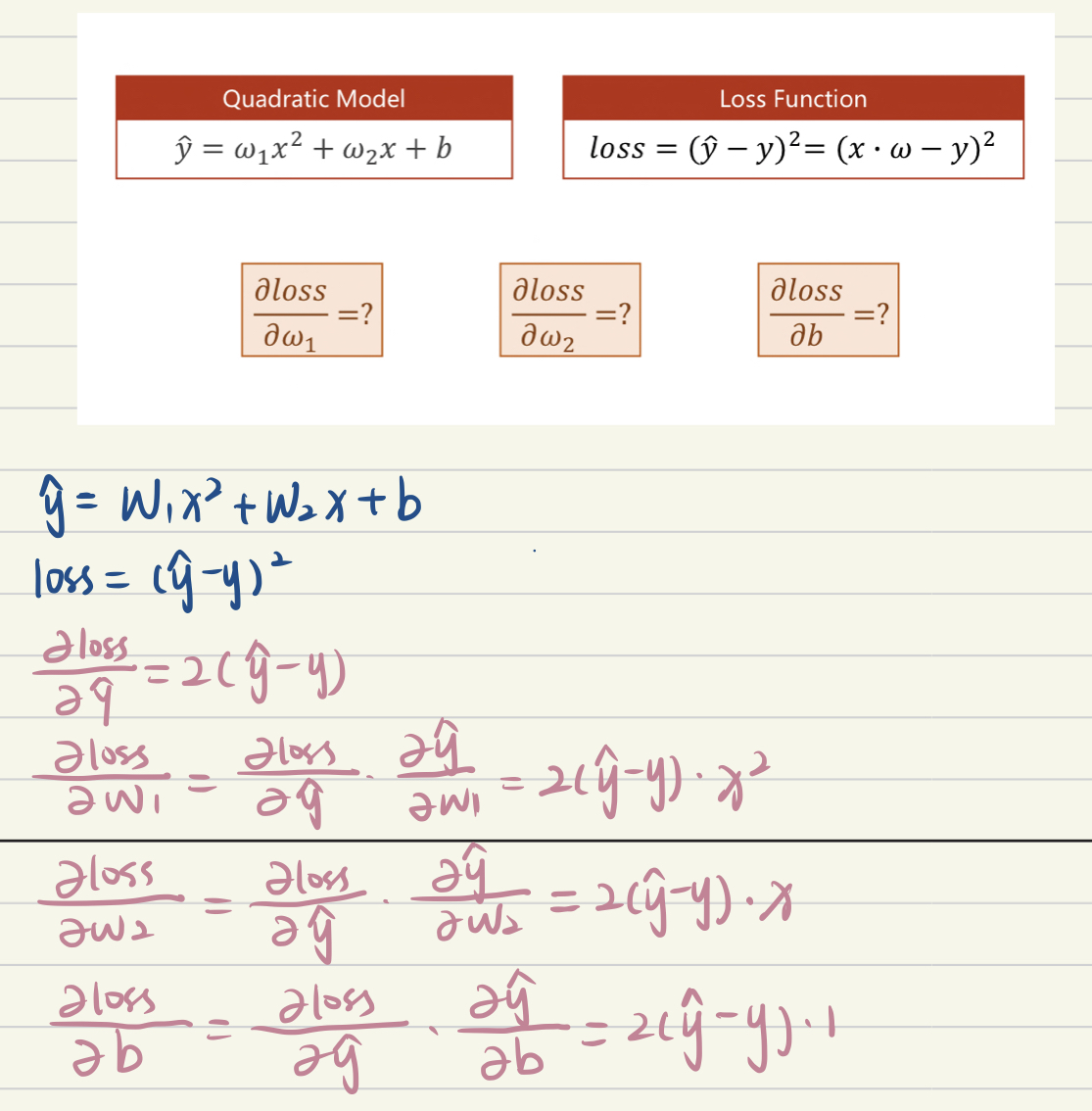

# Quadratic model: y_pred = w1 * x^2 + w2 * x + b
def forward(x):
    return w1 * x**2 + w2 * x + b

# Squared error for a single (x, y) sample
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) ** 2

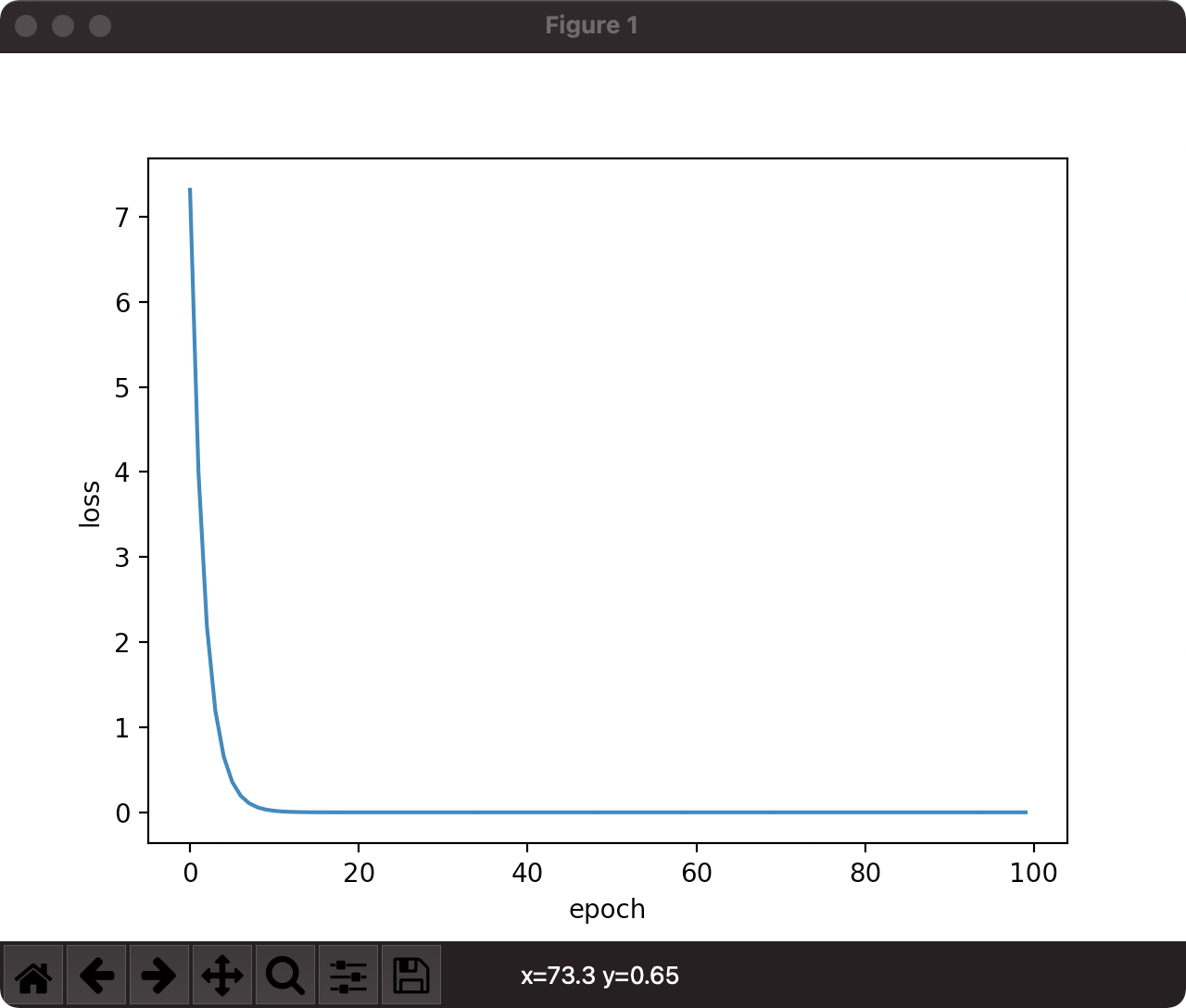

epoch_list = []

loss_list = []

print('Predict (before training):', 4, forward(4).item())

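for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)   # forward pass builds the computation graph
        l.backward()     # backward pass fills w1.grad, w2.grad, b.grad
        print("\tgradient: ", x, y, w1.grad.item(), w2.grad.item(), b.grad.item())
        # Update through .data so the step itself is not tracked by autograd
        w1.data -= 0.01 * w1.grad.data
        w2.data -= 0.01 * w2.grad.data
        b.data -= 0.01 * b.grad.data
        # Gradients accumulate across backward() calls, so reset them
        w1.grad.data.zero_()
        w2.grad.data.zero_()
        b.grad.data.zero_()
    # Record the loss of the last sample seen in this epoch
    print('Epoch: ', epoch, l.item())
    epoch_list.append(epoch)
    loss_list.append(l.item())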

print("Predict (after training):", 4, forward(4).item())

plt.plot(epoch_list, loss_list)

plt.ylabel("loss")

plt.xlabel("epoch")

plt.show()

|
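As a cross-check on what `l.backward()` computes in the loop above, the gradients of this quadratic model can also be derived by hand with the chain rule. The following is a minimal sketch in plain Python, not part of the original listing: the names `manual_gradients` and `train` are hypothetical helpers introduced only for illustration, and the learning rate and initial values are copied from the code above.

```python
# Hand-derived gradients for y_pred = w1*x**2 + w2*x + b with the
# per-sample loss (y_pred - y)**2. Plain Python, no autograd involved.
# `manual_gradients` and `train` are illustrative names, not PyTorch APIs.

def manual_gradients(x, y, w1, w2, b):
    """Return (loss, dL/dw1, dL/dw2, dL/db) for a single sample."""
    y_pred = w1 * x**2 + w2 * x + b
    residual = y_pred - y
    loss = residual**2
    # Chain rule: dL/dp = 2 * residual * d(y_pred)/dp
    return loss, 2 * residual * x**2, 2 * residual * x, 2 * residual


def train(x_data, y_data, lr=0.01, epochs=100):
    """Per-sample gradient descent with the same update rule as the loop above."""
    w1 = w2 = b = 1.0  # same initial values as the tensors in the listing
    for _ in range(epochs):
        for x, y in zip(x_data, y_data):
            _, g1, g2, gb = manual_gradients(x, y, w1, w2, b)
            w1 -= lr * g1
            w2 -= lr * g2
            b -= lr * gb
    return w1, w2, b
```

For the first sample `(1.0, 2.0)` with all parameters at `1.0`, the prediction is `3.0`, so the residual is `1.0` and each of the three gradients is `2.0`. These are the values one would expect the first `gradient:` line printed by the PyTorch loop to show.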